DataOps Principle #16 - Monitor Quality and Performance

For many of us we live in an E3/G3 world. So, what does that mean? Well, it means the licensing model for Microsoft 365 gives us access to other Power Platforms components in the Microsoft 365 ecosystem besides Power BI. One of the most versatile is Power Automate.

Since our teams may not always have access to the Azure resources or on-premise SQL data tools (or a Premium license for that matter), this leads our customers and our teams to leverage Power Automate for some interesting implementations like:

1) Scheduling refreshes - If we're working with Power BI premium capacities, Power Automate allows us to schedule more than the 30-minute refreshes provided with the dataflow and dataset scheduling interface found in the Power BI service. Now, to be clear, we use that capability knowing full well we could easy tax the capacity, but if the time to refresh is in small windows and/or we’re leveraging incremental refreshes, Power Automate offers a great approach to reducing the time to deliver data to our customers.

2) Deliver Content - With a Power Automate connector to Power BI, you can deliver reports and notifications directly to customers making their experience with a data analytics solution that much better.

3) E3LT – So, I am being cheeky here as Power Automate can subsitute some jobs that would normally be scripted and ran in Azure Data Factory or a SQL Agent. In the past, I have seen it used for things like copying files from one location to another or extracting Planner data (really, no native connector yet?). Ultimately, these tasks serve to put data in a location for Power BI to ingest and transform.

4) Making Data Actionable - With the introduction of the Power Automate visual, you can give customers within a Power BI report the ability to kick off flows using the context provided by Power BI. The applications of the visual are wide ranging, but I’ve seen it used to export data, send notifications, and kick of other processes in SharePoint.

With there being so many use cases for Power Automate to bolster a data analytics project, how do we monitor for quality and performance?

DataOps Principle #16: Our goal is to have performance, security and quality measures that are monitored continuously to detect unexpected variation and generate operational statistics.

By adding Power Automate we add more steps in the data analytics manufacturing line, and DataOps stresses that we should be monitoring these steps. So, given a solutions’ reliance on Power Automate, here are some things to put your project in a better position for monitoring:

1) Ownership with a Group - To know when a flow fails you need to have access to the flow. By creating a group and sharing ownership you can give those in the group the ability to view run history and update connections used in those flows.

2) Run Flow with a "Service Account" - If possible, you can use a non-user with a E3/G3 license to have the appropriate connections in your flow. This avoids running steps in a flow with connections associated with individuals that may lose permissions as they leave projects or teams. If the use of a service account is not possible then the next best practice is crucial.

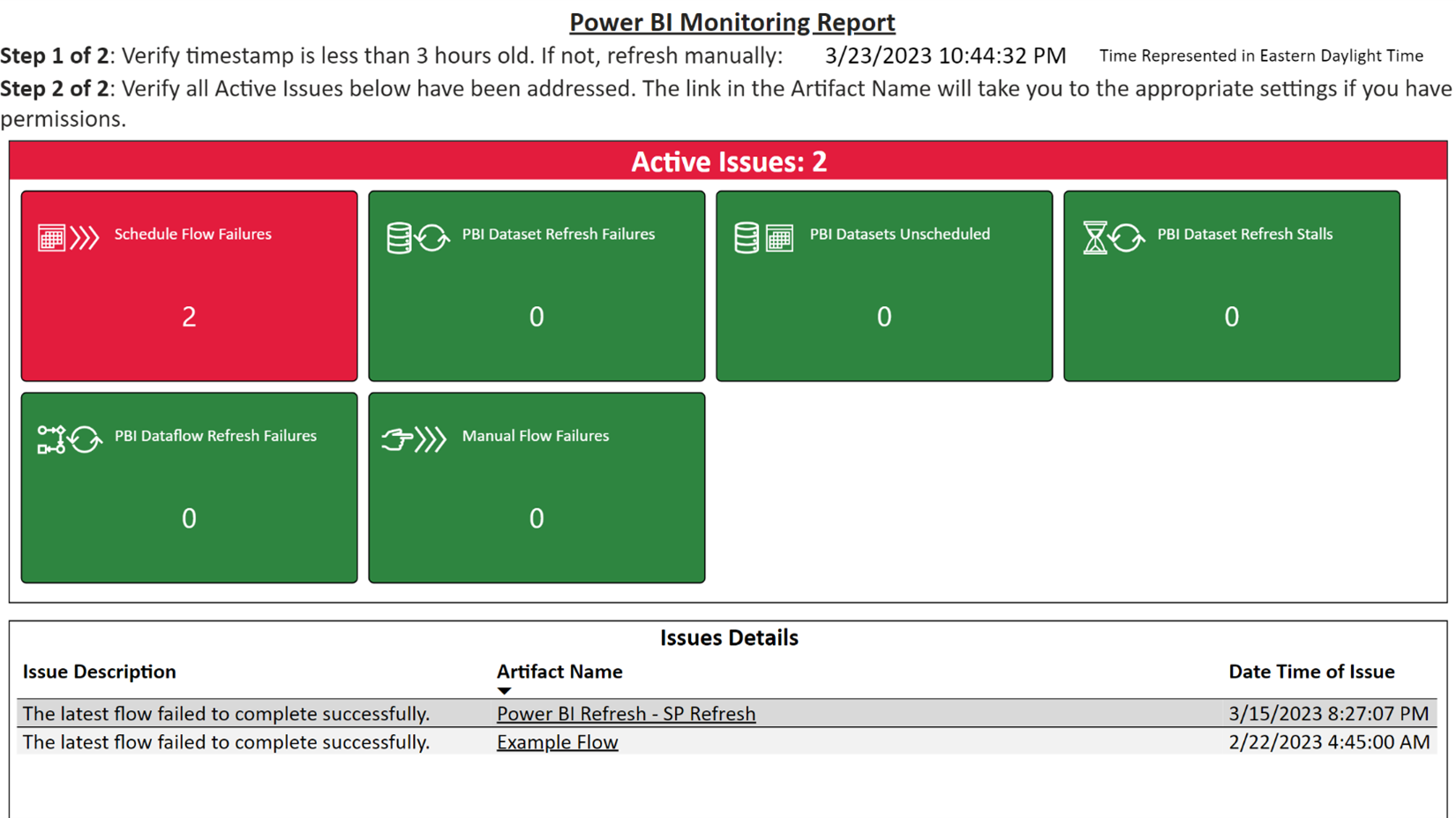

3) Centralize Monitoring - In Part 24, I introduced a template for monitoring datasets with a custom connector. Since that time, Luke Barker and I have collaborated on a custom connector which can pull information about Power Automate flows and their run history. Subsequently, I have a new template (see Figure 1) that centralizes the monitoring of datasets, Power BI dataflows, and Power Automate flows in one report. You can download the template by following the instructions here.

And for GCC customers, you'll be happy to know there is a template for you as well at the link provided.

Figure 1 – Example of the latest Power BI Monitoring Report

Luke and I continue to come up with ideas on better monitoring, so stayed tuned for updates here and on LinkedIn/ Twitter. We're already experimenting to see if we can identify flows that may have expiring connections in advance.

In the meantime, to have better monitoring, if you could vote for these Power BI ideas, I’d appreciate it: